Environment Sensors: From Vision to Perception

How data fusion gives vehicles a seventh sense

- Continental offers leading technologies for assisted and automated driving, such as radar, LiDAR and camera sensors. These sensors act as the vehicle's eyes, gathering information about its environment.

- By fusing sensor data, the vehicle can gain an overview of the entire traffic situation, understand the scene, and accurately anticipate potential hazards. This increases the performance and safety of the systems. Continental relies on a mix of classic methods and artificial intelligence (AI).

- The degree of vehicle automation will continue to increase in the coming years, and autonomous driving will become suitable for mass use. From 2025, level 3 and level 4 systems will be available to the mass market at affordable cost.

How can safety and assistance systems reliably detect hazards and obstacles? Continental relies on a "From Vision to Perception" approach. Vehicles should not only be able to see situations in traffic (vision), but also understand them (perception). To give vehicles something like a seventh sense, enabling them to interpret even complex traffic scenarios and act accordingly, a wide variety of sensor data is fused. Artificial intelligence helps in this process.

Increasingly complex technologies are being used in modern vehicles. There are already numerous safety and assistance systems that use sensors to collect information about the surroundings and thus help the driver keep a safe distance, stay in lane or brake in time (level 2). As the level of automation increases, so does the number of sensors - and therefore the amount of data that is collected and interpreted.

The sensors have very different strengths, and only the combination of the various technologies results in a complete and robust capture of the environment, which is essential for higher levels of automation.

Using sensors to assess the risk of crashes

But there is much more to the technology: Thanks to the sensors that act as its eyes, the vehicle can interpret the behavior of other road users very well. It can assess, for example, how great the risk of a crash is, whether a collision is imminent, or whether a pedestrian can be expected to stop or to cross the street.

"While a radar can detect distances to and movements of objects very precisely, the data fusion of radar and camera allows further details to be detected. For example, whether they are people, cyclists or strollers and how they behave. Is the pedestrian paying attention when crossing the street? Is he looking in the direction of the vehicle and traffic, or is he distracted? There is enormous potential here through the combination of sensor fusion and artificial intelligence," says Dr. Sascha Semmler, Head of Program Management Camera at Continental's Advanced Driver Assistance Systems (ADAS) business unit.

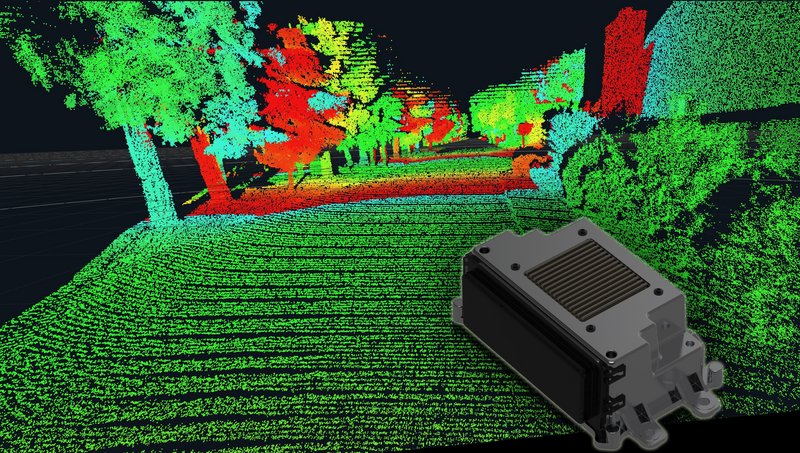

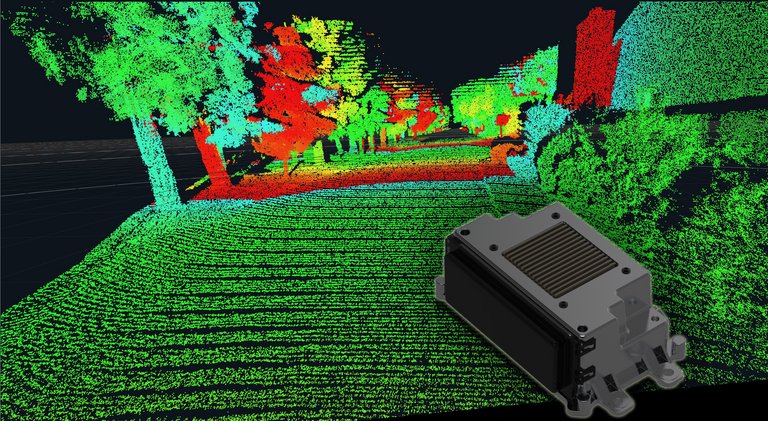
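
The division of labor described in the quote above, with radar contributing distance and motion and the camera contributing object type and attention, can be illustrated with a minimal Python sketch. It is not Continental's implementation: the class names, the azimuth-based matching, the `max_gap_deg` tolerance and the time-to-collision measure are all assumptions chosen for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class RadarTrack:            # hypothetical radar output
    azimuth_deg: float
    range_m: float
    closing_speed_mps: float  # positive means the object is approaching


@dataclass
class CameraDetection:       # hypothetical camera output
    azimuth_deg: float
    label: str
    looking_at_vehicle: bool


@dataclass
class FusedObject:
    label: str
    range_m: float
    closing_speed_mps: float
    looking_at_vehicle: bool

    def time_to_collision_s(self) -> Optional[float]:
        """Range divided by closing speed; None if the object is not approaching."""
        if self.closing_speed_mps <= 0:
            return None
        return self.range_m / self.closing_speed_mps


def fuse(tracks: List[RadarTrack],
         detections: List[CameraDetection],
         max_gap_deg: float = 2.0) -> List[FusedObject]:
    """Pair each radar track with the camera detection closest in azimuth."""
    fused = []
    for track in tracks:
        best = min(detections,
                   key=lambda d: abs(d.azimuth_deg - track.azimuth_deg),
                   default=None)
        if best is not None and abs(best.azimuth_deg - track.azimuth_deg) <= max_gap_deg:
            # Radar supplies range and speed, the camera supplies type and attention.
            fused.append(FusedObject(best.label, track.range_m,
                                     track.closing_speed_mps,
                                     best.looking_at_vehicle))
    return fused


tracks = [RadarTrack(-5.0, 20.0, 8.0), RadarTrack(10.0, 40.0, -1.0)]
detections = [CameraDetection(-4.5, "pedestrian", False),
              CameraDetection(10.4, "cyclist", True)]
objects = fuse(tracks, detections)
```

In this toy scene the distracted pedestrian is 20 m away and closing at 8 m/s, so the fused object reports a time to collision of 2.5 seconds, while the cyclist is moving away and poses no immediate risk. A production system would use far more robust association and tracking than nearest-azimuth matching.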

LiDAR as a third element of environment perception

In particularly challenging situations with poor visibility or darkness, for example, LiDAR supplements environment perception as a third element. Based on 25 years of LiDAR experience, Continental relies on a combination of short- and long-range LiDAR. "This year, our short-range 3D flash LiDAR technology will go into production with a premium OEM. Together with our partner AEye, we are developing a high-performance long-range LiDAR that can reliably detect vehicles at a distance of more than 300 meters, regardless of visibility conditions. This puts us in an excellent position to capture the entire vehicle environment with state-of-the-art LiDAR technology, enabling automated driving at SAE level 3 and higher, both for passenger cars and in truck applications," explains Dr. Gunnar Juergens, head of the LiDAR segment at Continental's Advanced Driver Assistance Systems (ADAS) business unit.

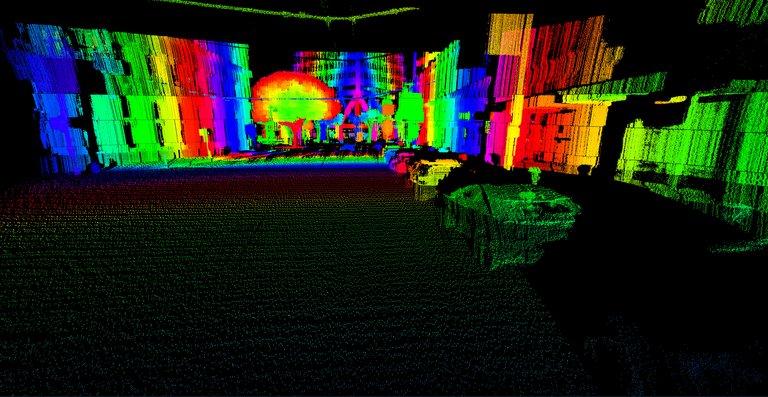

Bringing sensor data together through high- and low-level fusion

Continental uses different approaches for object detection: high-level fusion, mid-level fusion and low-level fusion. In high-level fusion, environment detection is first performed separately for each individual sensor - for example, detection of objects or the course of the road. These separate sensor results are then fused and used in the respective driving function. This classic approach has been used successfully for many years and has a high degree of maturity. But for the future, and especially for autonomous driving, low-level fusion or its combination with high-level fusion is of fundamental importance. Here, the raw sensor data is transmitted directly to a powerful central computer, where it is fused, and only then is the overall picture interpreted. The result is significantly higher system performance and "scene comprehension", in which the vehicle correlates road users, the course of the road, buildings and other elements of the scene, opening up a wide range of possibilities.
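
The difference between the two approaches can be sketched in a few lines of Python. This is a toy illustration under strong assumptions, not Continental's method: each sensor is reduced to a hypothetical list of evidence values per grid cell, "detection" is a simple threshold, and low-level fusion is modeled as adding the raw evidence before thresholding.

```python
from typing import List

THRESHOLD = 1.0  # hypothetical detection threshold on evidence per grid cell


def detect(evidence: List[float], threshold: float = THRESHOLD) -> List[int]:
    """Return the indices of grid cells whose evidence reaches the threshold."""
    return [i for i, e in enumerate(evidence) if e >= threshold]


def high_level_fusion(radar: List[float], camera: List[float]) -> List[int]:
    """Detect separately per sensor, then merge the resulting object lists."""
    return sorted(set(detect(radar)) | set(detect(camera)))


def low_level_fusion(radar: List[float], camera: List[float]) -> List[int]:
    """Combine the raw evidence first, then interpret the fused data once."""
    fused = [r + c for r, c in zip(radar, camera)]
    return detect(fused)


# Cell 3 holds an object that each sensor sees only weakly.
radar = [0.1, 1.2, 0.0, 0.6, 0.1]
camera = [0.0, 0.3, 0.1, 0.7, 1.1]

print(high_level_fusion(radar, camera))  # [1, 4]
print(low_level_fusion(radar, camera))   # [1, 3, 4]
```

In this example the object in cell 3 is missed by high-level fusion because neither sensor is confident enough on its own, while low-level fusion finds it because the weak evidence from both sensors is combined before a decision is made. Real systems replace the simple sum and threshold with calibrated models or neural networks, and must also control the false positives that combining raw data can introduce.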

Recognizing the environment in detail and interpreting traffic scenarios

"Low-level fusion is our strength, which we are using to drive the development of automated and autonomous driving systems at Level 3 and 4," explains Dr. Ismail Dagli, head of Research and Development at Continental's Advanced Driver Assistance Systems (ADAS) business unit. "Recognizing the environment in such detail was not possible for vehicles before. Especially by using artificial intelligence in data fusion and situation interpretation, the vehicle knows what's going to happen, can draw better conclusions and perform maneuvers." Currently, systems that use low-level fusion are already in use in certain areas. By 2025, the Continental expert estimates, these systems could also be in mass production - paving the way for cost-effective L3 and L4 systems.

The new approach to low-level fusion is made possible by artificial intelligence. Intelligent algorithms and neural networks take over where classic data processing methods reach their limits: They help classify and interpret complex driving situations that can no longer be captured with hand-written rules. In the process, the algorithms evaluate large volumes of data within fractions of a second and train the systems for later use. AI also makes it easier to incorporate new functions into technologies. When new developments arise, such as e-scooters suddenly appearing more frequently in traffic, the systems can be retrained and adapted via over-the-air updates. Continental is continuously expanding its competencies in the field of AI. Strategic partnerships are increasing the speed of innovation in this area.

Gaining trust by recognizing and understanding information

"Advanced driver assistance systems, as they are already used today in assisted driving, so-called Level 2, are an important step on the way to automated driving, Level 4," explains Dr. Ismail Dagli, head of Research and Development at Continental's Advanced Driver Assistance Systems (ADAS) business unit. "It is extremely important that these technologies work reliably and thus gain the trust of all of us." As the level of automation in vehicles increases, so does the number of sensors. While a handful are still enough at Level 2, the next evolutionary stage already has two to five times as many. That's where AI-based data fusion is essential to obtain a complete and correct picture of the traffic situation. "In the future, the systems will take over more and more tasks from the driver. He is still fully in charge, has the final say with regards to the safety systems and has to check whether they are correct. That will change in the next few years."