Robocar Programming: 7 Functions We Implemented

On October 27th we successfully completed the final version of the Robocar. In total, 20 of our employees participated in the project.

Starting September 1st, we continuously added new functions to our Robocar. During this process, we learned the basics of software development and control theory. In this entry, I will briefly outline:

- What we implemented

- Our thoughts on the future of the automotive industry

What We Implemented

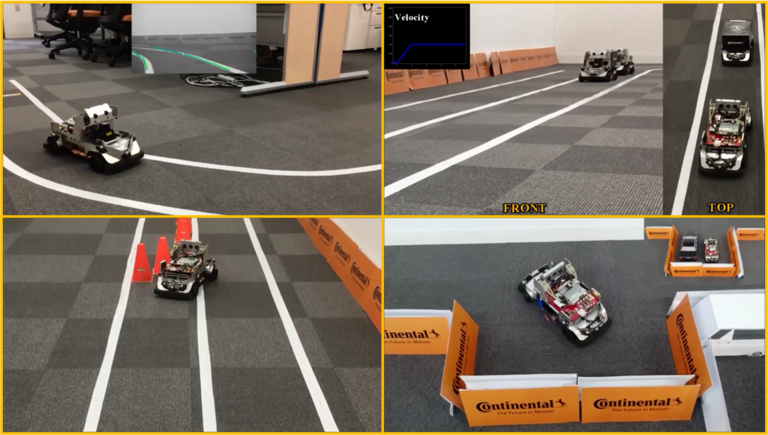

First, we implemented four basic functions:

- Lane Keep

- Cruise Control

- Obstacle Avoidance

- Parking

The sensors we used include stereo cameras, infrared radar, and laser distance sensors. One of the major difficulties we came across was the car’s measurement of its own location. For lane keep and cruise control, the location can be estimated from the lane itself. This does not work for obstacle avoidance and parking. There, the Robocar’s location has to be estimated from relative position data.
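The entry does not say how the relative position data was processed. One common way to estimate position from relative measurements is dead reckoning: each measured displacement and heading change is added onto the last known pose. The sketch below is only an illustration of that idea. It assumes a hypothetical odometry input (distance traveled and heading change per step), and the names `Pose` and `dead_reckon` are made up for this example, not taken from our Robocar code.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose:
    x: float = 0.0      # position in metres, in the starting frame
    y: float = 0.0
    theta: float = 0.0  # heading in radians; 0 = initial driving direction


def dead_reckon(pose: Pose, distance: float, dtheta: float) -> Pose:
    """Advance the pose by one relative measurement (hypothetical odometry step).

    The step is applied along the midpoint heading, a simple
    approximation that works well for small heading changes.
    """
    mid = pose.theta + dtheta / 2.0
    return Pose(
        x=pose.x + distance * math.cos(mid),
        y=pose.y + distance * math.sin(mid),
        theta=pose.theta + dtheta,
    )


if __name__ == "__main__":
    pose = Pose()
    # Hypothetical steps: 1 m straight ahead, then 1 m while turning 90 degrees left.
    for d, dth in [(1.0, 0.0), (1.0, math.pi / 2)]:
        pose = dead_reckon(pose, d, dth)
    print(f"x={pose.x:.3f} y={pose.y:.3f} theta={math.degrees(pose.theta):.1f} deg")
```

Because every step builds on the previous estimate, small measurement errors accumulate over time. That is one reason an absolute reference, such as the lane during lane keep, is valuable whenever it is available.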

After solving this problem, we designed three additional functions:

- Passing

- Wall Slalom

- Pylon Slalom

Passing is an ability we integrated into the lane-keep and obstacle-avoidance functions. The obstacle-avoidance function also contains the slalom ability, and obstacle avoidance is in turn part of the parking feature. We discussed many possibilities and decided to design these functions ourselves. (This is “freedom to act”, isn’t it? :)) By now, we understand the capabilities of each sensor very well, and it was a really good experience to think about the future of automotive technologies.

Our Thoughts on the Future of the Automotive Industry

While developing the algorithms, we discussed many things, but above all the future of the automotive industry. How can automated driving be realized? The main issue for our Robocar (and for actual automated driving) is image recognition. Recognition results depend heavily on the brightness of the environment, so performance differs from day to day. That is why sensor fusion (combining a camera with a laser or radar) is important and is used in most products. Even so, detection accuracy is not yet 100%. To improve pedestrian detection and emergency braking, this issue should be solved as soon as possible. I don’t know what the “silver bullet” for this problem will look like, but we will keep trying to find a solution. For now, thank you for reading my entry!

This article was written by one of our employees.

Nobuto Yoshimura