Virtual Driving School: Continental Uses AI to Give Vehicle Systems Human Strengths

- Pedestrian intention and gesture classification using neural networks

- Pedestrian detection using Artificial Intelligence (AI) – large-scale production use in the fifth camera generation

- Hardware and software offer everything from simple object detection to complex scene understanding using neural networks for automated driving

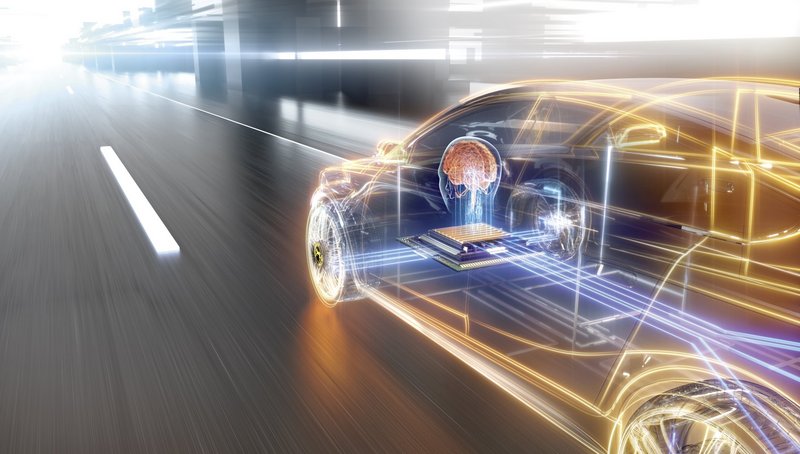

Shanghai (China), June 11, 2018. A basic requirement for sophisticated advanced driver assistance functions and automated driving is a detailed understanding and precise assessment of the entire traffic situation. For automated vehicles to assume control from drivers, they must understand the imminent actions of all road users so that they can make the right decision in every traffic situation. This task is best performed by algorithms trained with deep machine learning methods. At CES Asia, Continental will be exhibiting a computer vision platform that uses Artificial Intelligence (AI), neural networks and machine learning to improve advanced sensor technologies. The fifth generation of Continental’s multi-function camera, which will begin series production in 2020, will use neural networks alongside traditional computer vision processes. Depending on the available hardware, these can be scaled and refined and, using intelligent algorithms, they improve scene understanding, including the classification of pedestrians’ intentions and gestures.

“AI plays a large role in taking over human tasks. With AI software, the vehicle is able to interpret even complex and unforeseeable traffic situations – it’s no longer about what’s in front of me but about what could be in front of me,” says Karl Haupt, head of the Advanced Driver Assistance System business unit at Continental. “We see AI as a key technology for automated driving. AI is a part of an automotive future.” Drivers perceive the environment with their senses, process this information using their intelligence, make decisions and carry them out using their hands and feet to operate the car. An automated vehicle must be able to do all of this, too. This requires its capabilities to be at least on a par with those of humans.

Developing scene understanding and contextual knowledge

Artificial Intelligence opens up new possibilities for the computer vision platform. For example, AI can detect people and interpret their intentions and gestures. Continental uses AI to give vehicle systems human strengths. “The car should be so intelligent that it understands both its driver and its surroundings,” says Robert Thiel, head of Machine Learning in the Advanced Driver Assistance Systems business unit. An example illustrates what this means: A rule-based algorithm within an automated driving system will only react when a pedestrian actually steps onto the road. AI algorithms, by contrast, can detect the intentions of an approaching pedestrian in advance. In this regard, they are similar to an experienced driver who instinctively recognizes such a situation as potentially critical and prepares to brake early. The goal is a full understanding of the scenario, which makes it possible to predict what will happen next and to react accordingly.
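The contrast between the two approaches can be illustrated with a minimal Python sketch. Everything in it is an assumption made for illustration: the `Pedestrian` fields, the thresholds and the hand-written `intention_score` function are hypothetical and merely stand in for a trained neural network. It does not represent Continental's software.

```python
from dataclasses import dataclass


@dataclass
class Pedestrian:
    # Hypothetical features; a real system would derive far richer cues.
    distance_to_road_m: float   # 0.0 or less means the person is on the road
    approach_speed_mps: float   # positive when moving towards the road
    facing_road: bool


def rule_based_should_react(p: Pedestrian) -> bool:
    """Reacts only once the pedestrian has actually stepped onto the road."""
    return p.distance_to_road_m <= 0.0


def intention_score(p: Pedestrian) -> float:
    """Hand-written stand-in for a learned intention classifier."""
    score = 0.0
    if p.approach_speed_mps > 0.0:
        time_to_road_s = p.distance_to_road_m / p.approach_speed_mps
        if time_to_road_s < 2.0:   # assumed threshold
            score += 0.6
    if p.facing_road:
        score += 0.3
    return score


def predictive_should_prepare(p: Pedestrian, threshold: float = 0.5) -> bool:
    """Flags a potentially critical situation before the road is entered."""
    return intention_score(p) >= threshold


approaching = Pedestrian(distance_to_road_m=1.5, approach_speed_mps=1.0, facing_road=True)
waiting = Pedestrian(distance_to_road_m=3.0, approach_speed_mps=0.0, facing_road=False)
```

For the `approaching` pedestrian, the rule-based check still returns `False` because the person is not yet on the road, while the predictive check already returns `True`. For the `waiting` pedestrian, both return `False`.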

Deep machine learning as a virtual driving school

Just like humans, AI systems have to learn new skills: humans in a driving school, AI systems through “supervised learning.” To do this, the software analyzes huge amounts of data to derive successful and unsuccessful strategies for action and later applies this learned knowledge in the vehicle. This essential ability of the algorithms to learn is continually being developed. For advanced driver assistance systems, data suitable for this kind of learning is available, for example, in the form of recorded radar and camera signals from real driving situations. This huge database is a key component of the further development of AI at Continental. For example, the company relies on AI in its product development departments for extremely complex tasks such as pedestrian detection, where the concrete parametrization of the design is learned from huge quantities of data. The first step is to create a system that combines and parametrizes the input data, i.e. the millions of pixels in a camera image used for pedestrian detection. The second step is to enable this system to search for the combination of parameters that solves the problem.
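The two steps described above can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not the method used in the camera: the labelled data is invented, each two-value input merely stands in for the pixels of an image, the parametrized system is a simple logistic regression rather than a deep neural network, and gradient descent plays the role of the parameter search.

```python
import math

# Invented toy data: each input stands in for an image, label 1 = "pedestrian".
SAMPLES = [(0.1, 0.2), (0.2, 0.1), (0.3, 0.3), (0.8, 0.9), (0.9, 0.7), (0.7, 0.8)]
LABELS = [0, 0, 0, 1, 1, 1]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(weights, bias, x) -> float:
    """Step 1: a system that combines the inputs through adjustable parameters."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


def train(samples, labels, learning_rate=0.5, epochs=5000):
    """Step 2: search for parameters that fit the labelled examples."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    n = len(samples)
    for _ in range(epochs):
        grad_w = [0.0] * len(weights)
        grad_b = 0.0
        for x, y in zip(samples, labels):
            error = predict_proba(weights, bias, x) - y
            for i, xi in enumerate(x):
                grad_w[i] += error * xi
            grad_b += error
        # Move the parameters a small step against the average gradient.
        weights = [w - learning_rate * g / n for w, g in zip(weights, grad_w)]
        bias -= learning_rate * grad_b / n
    return weights, bias


weights, bias = train(SAMPLES, LABELS)
predictions = [int(predict_proba(weights, bias, x) >= 0.5) for x in SAMPLES]
```

After training, `predictions` matches `LABELS` on this toy data. A production system differs mainly in scale: millions of pixel inputs, many layers of parameters and far larger labelled data sets.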

The technology company will begin large-scale production of this system with its fifth camera generation; the previous camera generation already uses deep learning approaches. Methods based on deep learning help to master this complexity on various levels – from monitoring the vehicle’s surroundings to planning the driving strategy to actually controlling the vehicle. Deep learning methods are also scalable: more data and more computing power translate into more performance.

Expansion of global AI activities at Continental

In 2015, Continental set up a central predevelopment department to coordinate the various research activities focusing on artificial intelligence. The technology company is collaborating with NVIDIA, Baidu and many research institutes in this field, including the University of Oxford, the Technische Universität Darmstadt and the Indian Institute of Technology Madras (India). In Budapest (Hungary), the Continental Advanced Driver Assistance Systems business unit opened a competence center for deep machine learning in May 2018. By the end of 2018, the technology company will employ around 400 engineers with special AI expertise worldwide and is looking for more talented people for product and process development in the field of artificial intelligence.

Continental will be demonstrating pedestrian intention and gesture classification at CES Asia from June 13–15 (hall N5, stand no. 5702, Shanghai New International Expo Center).

Sören Pinkow

Media Spokesperson and Topic Manager Safety and Motion

Continental Automotive